Introduction

A lot is happening in AI. And I mean A LOT.

Text generation, image generation, audio & speech generation and even app generation. It feels like we are finally living in the future. We have unlocked immense capability, productivity and even creativity with these new tools, and everyone is scrambling to find the best way to use them. But that raises the question: how do we use them?

AI's burgeoning potential is fundamentally changing how we interact with computers. In this article, we will explore the following:

How AI multimodality turns human-computer interaction on its head.

How Copilots have conquered the app space.

How multimodality is changing the way we communicate with machines.

What impact generative AI and agents will have on the UIs of the future.

Join me and find out how UI can harness this new-found AI power.

Let's dive in!

Understanding the User Interface

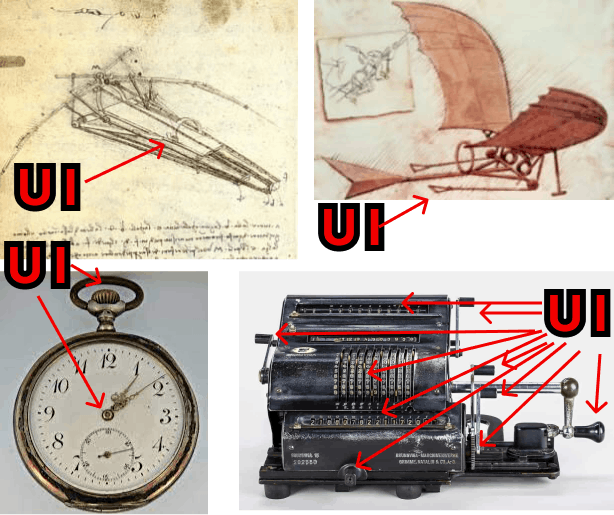

UIs have existed ever since we started building machines. Leonardo da Vinci's contraptions needed levers and straps to operate. Watches had hands to display the time and a crown to set it.

So, what are user interfaces exactly?

"The user interface (UI) is the space where interactions between humans and machines occur." (Wikipedia)

UIs act as a bridge between humans and the capabilities of their machines. The purpose of a UI is to bring simplicity and efficiency to human-machine interactions, helping users achieve their goals while making the experience engaging and accessible.

And as the machines evolved, so did the UIs.

I made a short storybook (with pictures 🖼) that outlines the evolution of UIs thus far:

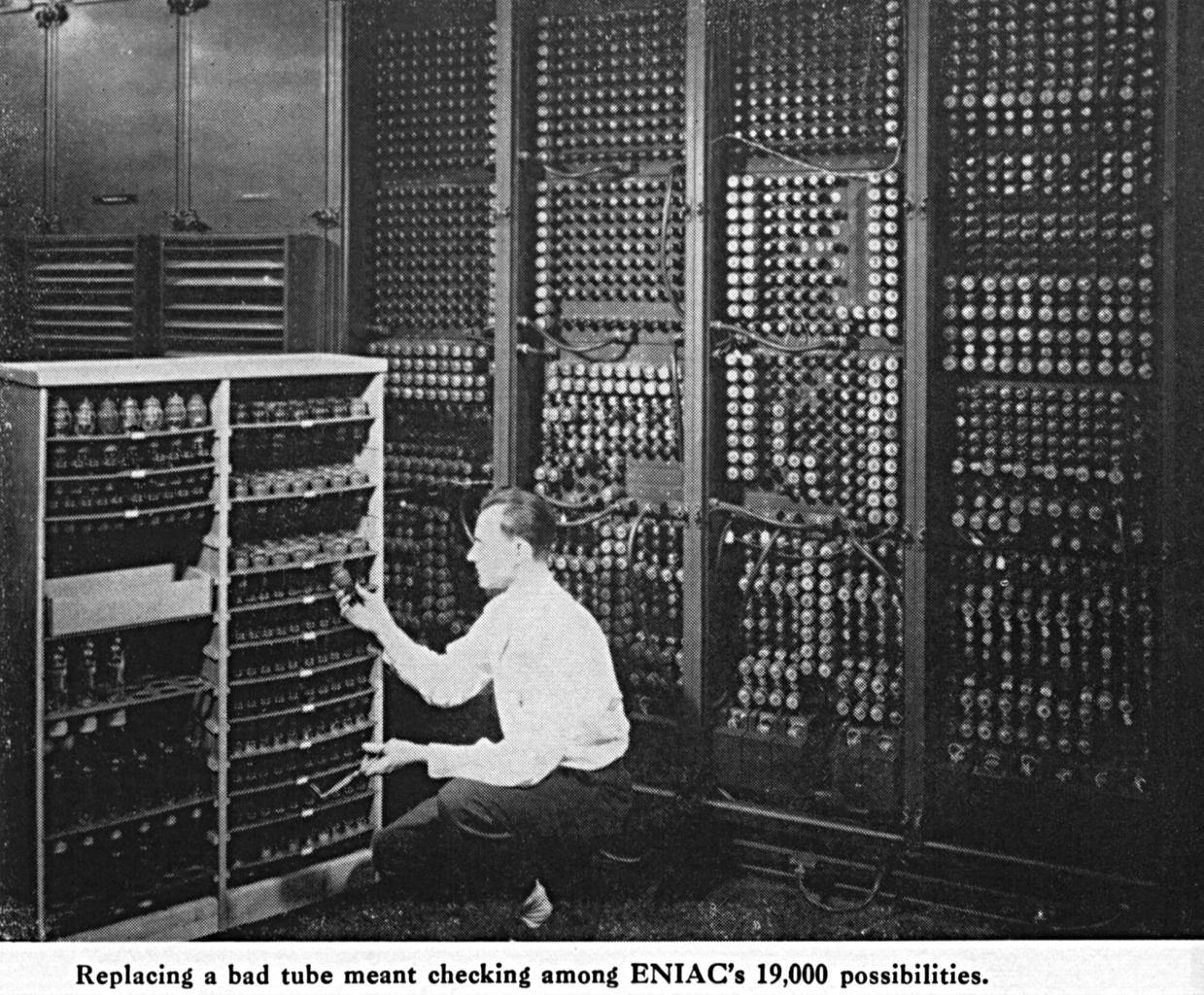

40s: Programming the first computers, such as the ENIAC, required laboriously rewiring plugboards and setting switches by hand. One would look at various lights on the computer to read the machine's output.

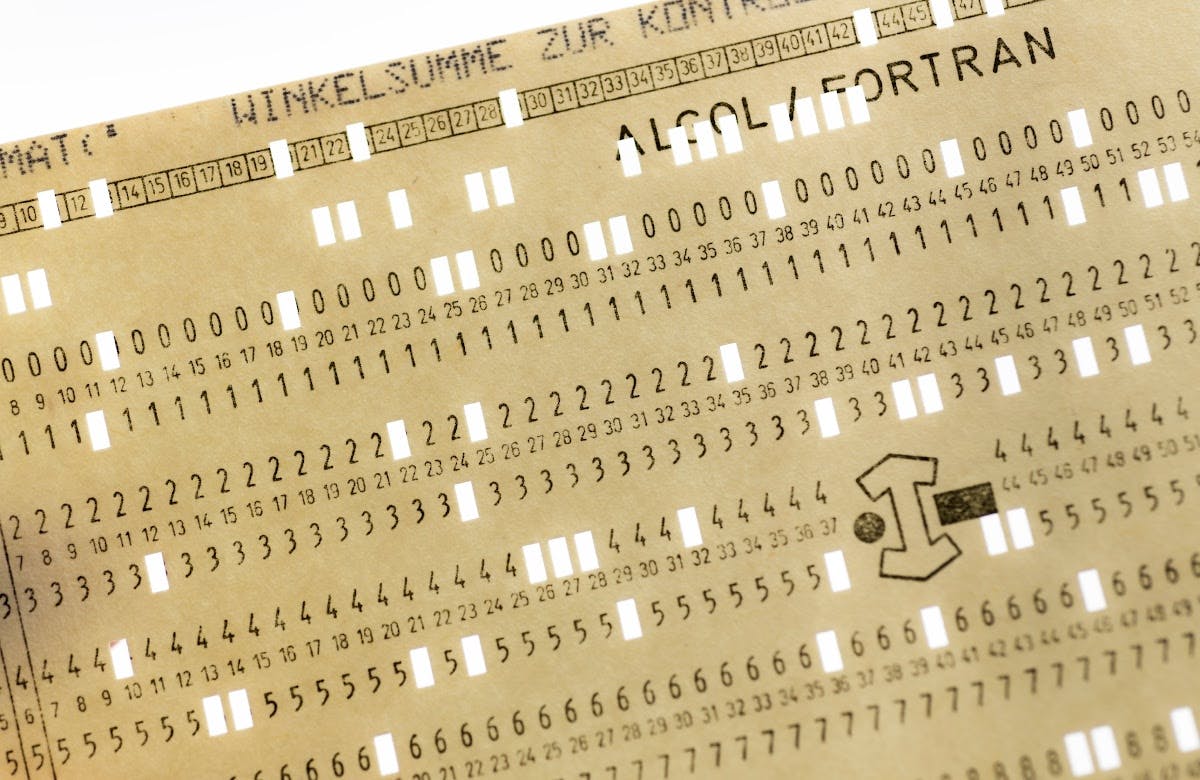

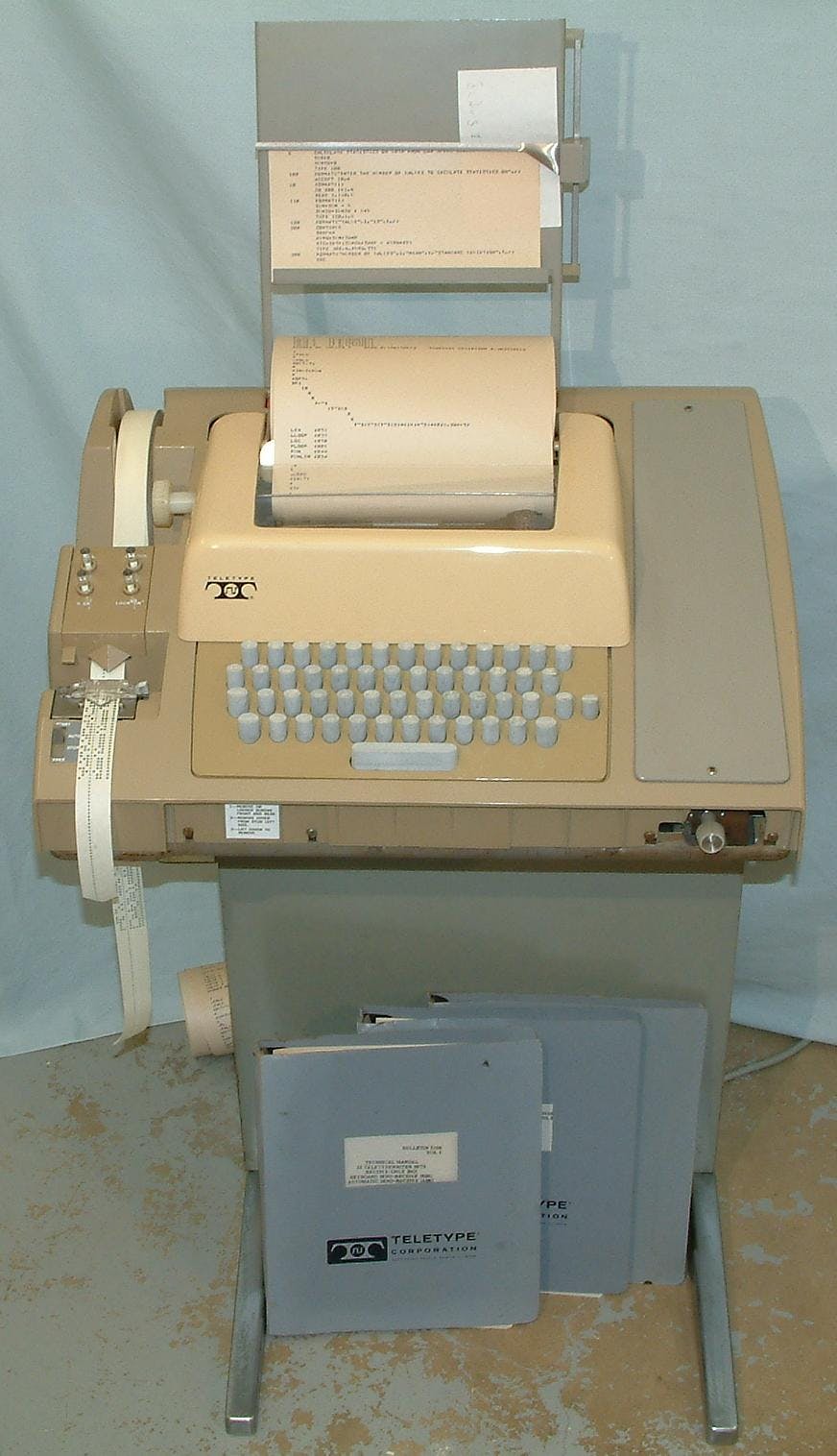

50s: Punch cards allowed the user to write programs away from the often deafening computer room. This also opened up collaboration and asynchronous work.

60s: The mouse is invented. The Unix command-line interface (CLI) dates back to 1969 and remains one of the main ways we use computers today.

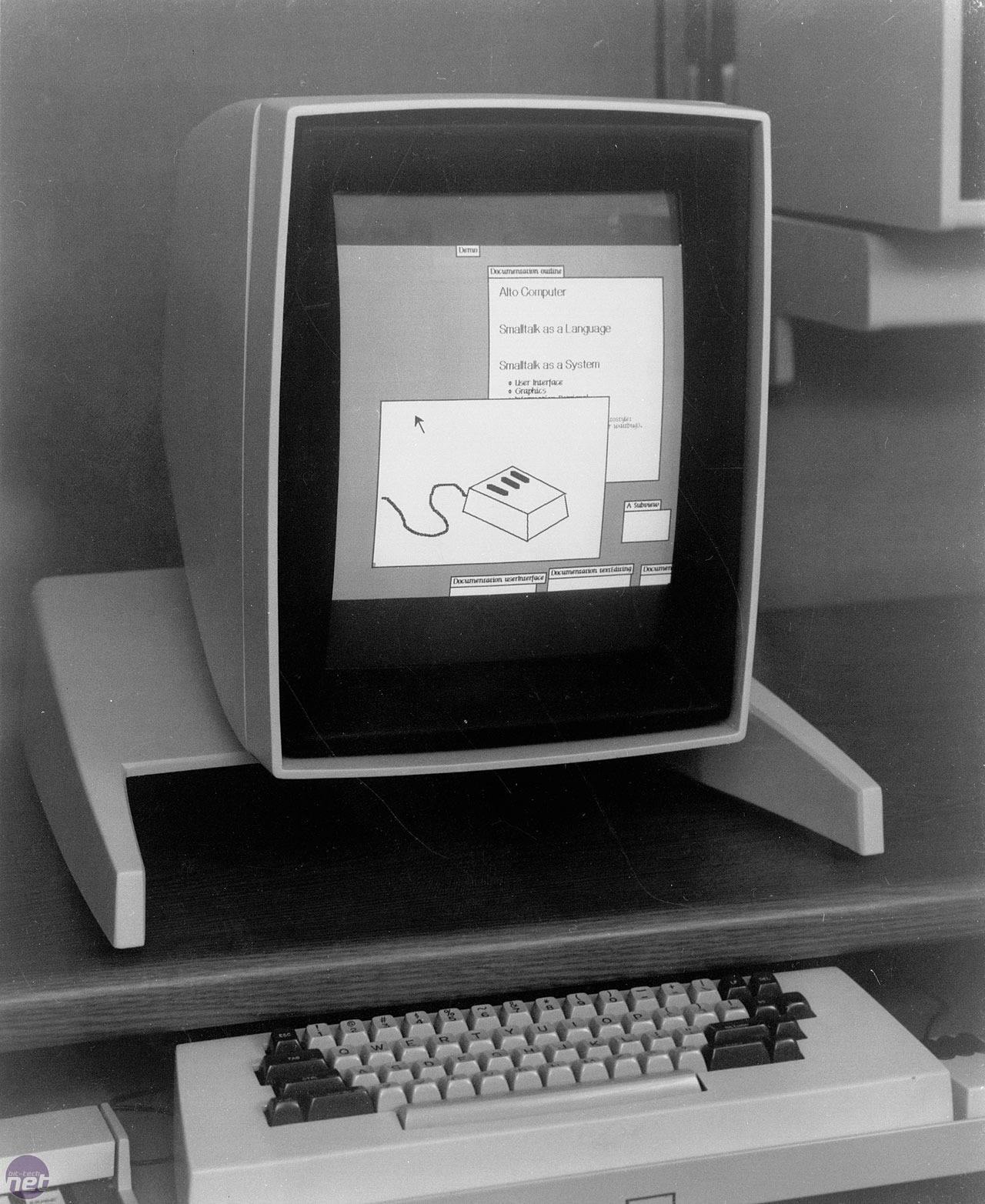

70s: Xerox PARC developed the Alto, one of the first computers with a graphical user interface. This ushered in the era of Video Display Terminals (VDTs). As a 21st-century citizen, I don't need to tell you how important screens would become.

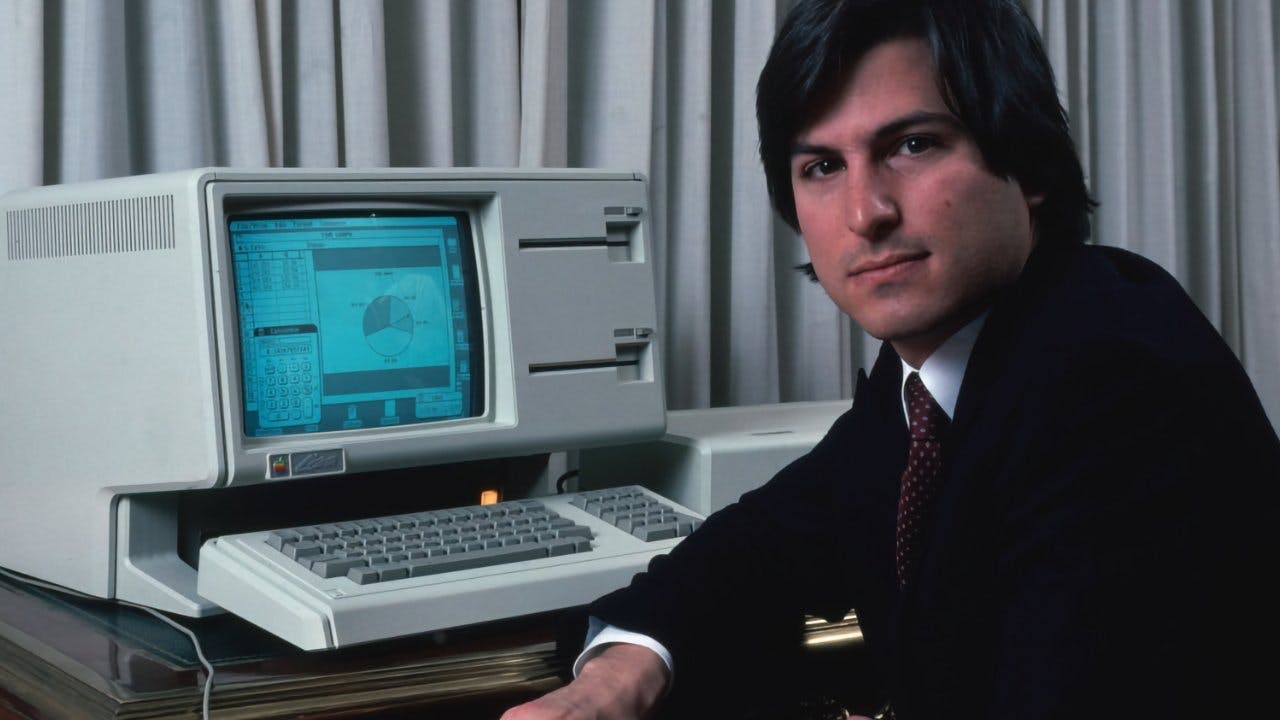

1983: Apple releases the Lisa, one of the first commercial computers with a GUI.

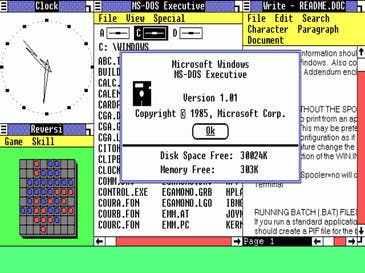

1985: Microsoft releases Windows 1.0, making GUIs mainstream.

2007: Apple releases the iPhone, bringing the multi-touch GUI to the mainstream.

2010-2016: Siri, Alexa, and Google Assistant brought voice interfaces to the masses.

I paint this evolution in broad strokes, yet you can see how our communication with our machines has changed. What started by pulling levers, pushing buttons and replacing parts turned into clicking, tapping and speaking. Looking for lights around the device and decoding punch cards turned into icons, tables, text and images.

As our machines become more capable, the need for higher-bandwidth communication increases. The past several decades have brought a continuous increase in resolution, both in the output from and the input to the machine.

Understanding Humans

Tony Stark's digital assistant, J.A.R.V.I.S., from the 2008 movie "Iron Man" painted a beautiful picture of what would be possible if machines could understand us.

Iron Man and sci-fi stories like it no doubt inspired the 2010s incarnation of voice assistants like Siri, Alexa and Google Assistant. Despite the natural language processing (NLP) technology behind them, they didn't live up to that dream. Using them felt more like triggering keywords than having a conversation.

When I first played around with GPT-3 in 2022, what struck me immediately was how well the computer was able to grasp what I meant to say. For the first time, the machine did the heavy lifting in the conversation. My input could have lousy spelling or incomplete sentences, but the computer filled the gaps with astounding competence. I knew immediately that Large Language Models (LLMs) would fundamentally change how we interact with computer systems.

Up to now, user interfaces were the answer to the question: "How do humans communicate with machines?"

From now on, user interfaces will answer the question: "How can machines communicate like humans?"

The first answer to this new question was undoubtedly chat.

The Rise of the Copilot

GPT-3 has been around since June 2020 but only really took off when ChatGPT was released in late 2022. Why was ChatGPT popular when GPT-3 wasn't? Yes, Reinforcement Learning from Human Feedback (RLHF) played a massive part in making the output more useful. But the other part of the equation is the chat interface.

From texting in the 90s to MSN Messenger in the 2000s to Facebook in the 2010s, chat has proven to be one of the mainstays of human communication: asynchronous, fast, back-and-forth, and ever more expressive. The perfect medium to test the machine's new human-level communication skills.

Chatbots & Customer Service

A chat box inside the app connects you to a support agent where you need them. Placing support in the product itself can give the support agent valuable context on your problem. Pages you visited, actions you performed, the steps you got stuck on. Chat's back-and-forth nature helps zero in on the issue and solve it quickly.

But this time, no human is sitting on the other end of the line. Instead, there is a domain expert that can ingest all your browsing context as you go and immediately synthesize an answer to your question. No being transferred to technical support, no language barriers, no wait time.
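To make "ingesting your browsing context" concrete, here is a minimal sketch of how an in-app assistant might fold session context into the text it sends to a language model. The `SessionContext` fields, the `build_support_prompt` name and the prompt wording are all hypothetical; real products capture and format context in their own ways.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionContext:
    """Hypothetical record of what the user did in the app before asking for help."""
    pages_visited: list[str] = field(default_factory=list)
    actions: list[str] = field(default_factory=list)
    stuck_on: Optional[str] = None


def build_support_prompt(question: str, ctx: SessionContext, max_items: int = 5) -> str:
    """Combine recent in-app context with the user's question into one prompt string."""
    lines = ["You are an in-app support assistant. Use the session context to answer."]
    # Keep only the most recent items so the prompt stays short.
    if ctx.pages_visited:
        lines.append("Recent pages: " + ", ".join(ctx.pages_visited[-max_items:]))
    if ctx.actions:
        lines.append("Recent actions: " + ", ".join(ctx.actions[-max_items:]))
    if ctx.stuck_on:
        lines.append("User appears stuck on: " + ctx.stuck_on)
    lines.append("Question: " + question)
    return "\n".join(lines)


if __name__ == "__main__":
    ctx = SessionContext(
        pages_visited=["/billing", "/billing/invoices"],
        actions=["clicked Download PDF"],
        stuck_on="invoice export",
    )
    print(build_support_prompt("Why can't I download my invoice?", ctx))
```

The user only types the question; everything above it comes from the product itself, which is exactly what a separate support website cannot see.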

All this is now possible at scale. Offering this level of service dramatically increases the product's value and used to cost companies millions. Cutting costs while increasing value; no wonder incumbents are jumping at the opportunity.

Copilots started as chatbots. But now they are so much more.

Workspace Copilot

Copilots take support to the next level:

Support concerns itself with user issues and is by nature reactive.

The copilot is concerned with user objectives and is proactive.

This is a fundamental change in service. The copilot is your personal expert. Always available, always clued-in on what you're doing and trying to do.

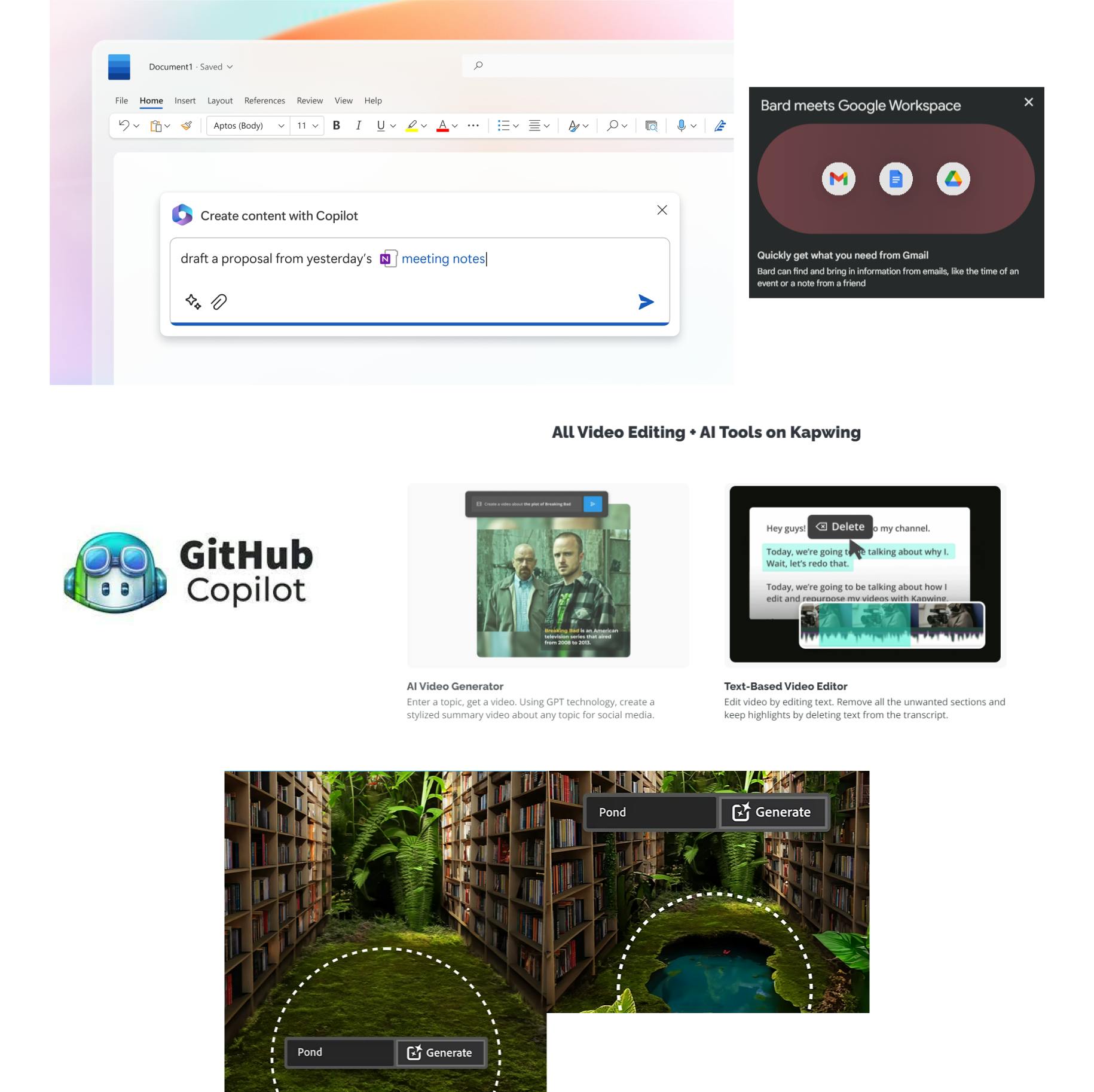

GitHub Copilot reads your code and predicts your next move. Just hit "Tab" to accept. This seemingly simple workflow saves developers countless hours. Its adoption in the developer community speaks for itself.

Microsoft 365 Copilot and Google Bard now plug directly into documents, spreadsheets, email and more. Don't know how to plot a graph in Excel? Microsoft's Copilot does. Want to know what's been going on in your inbox? Summarize it in Bard.

The Google search engine changed how we interact with information. Memorizing facts lost its value in a "just google it" world.

Copilots are starting to shift the way we use applications. Why take an Excel course if you can "just copilot it"?

Power to the Users

Again, we are shifting away from domain-specific, time-consuming, hard-to-learn skills. What matters in this new world of applications is user intent. The struggle of UI was to expose the right amount of surface area to the user such that a balance is struck between capability and ease of use. But now that we have AI as a translation layer between user intent and tool use, the user no longer needs to understand the tool itself.

App creators can now build tools of arbitrary complexity without regard for UX. And that is a good thing. Let me explain.

How many times have you used ipconfig in your terminal? Do you understand its output? I am speaking to a technical crowd, so perhaps you do. But does Carol from accounting, who is wondering why her internet stopped working?

In our daily lives, we use only a fraction of what our machines are capable of. Many system features are not even accessible through menus because building UI is complex and expensive. But a copilot with access to those features can understand how and when to use them so Carol doesn't have to. This effectively increases the user's power without having to teach them or build UI. With a copilot, every user is now a superuser.
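The "translation layer between user intent and tool use" can be sketched as a registry of system features plus a router that picks one for a plain-language request. In a real copilot, an LLM reads each tool's description and decides; here, keyword overlap is a deliberately crude stand-in so the example runs without a model. The tool names and their placeholder bodies are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tool:
    name: str
    description: str        # what a real model would read to decide when to use the tool
    keywords: set[str]      # crude stand-in for that decision in this sketch
    run: Callable[[], str]  # the underlying system feature


def route(request: str, tools: list[Tool]) -> Optional[Tool]:
    """Pick the tool whose keywords best overlap the user's request, or None if nothing matches."""
    words = set(request.lower().replace("?", "").split())
    best = max(tools, key=lambda t: len(t.keywords & words))
    return best if best.keywords & words else None


# Hypothetical tools; the bodies are placeholders, not real diagnostics.
TOOLS = [
    Tool("diagnose_network", "Check IP configuration and connectivity",
         {"internet", "wifi", "network", "connection", "offline"},
         lambda: "placeholder: would run ipconfig and summarize it in plain language"),
    Tool("set_default_printer", "Choose which printer is used by default",
         {"printer", "print", "printing"},
         lambda: "placeholder: would change the default printer"),
]

if __name__ == "__main__":
    tool = route("Why did my internet stop working?", TOOLS)
    print(tool.name if tool else "no matching tool")
```

Carol never sees `ipconfig`; she states her problem and the copilot decides which feature to use, which is the point of the paragraph above.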

"Okay," I hear you ask, "what's the endgame here? Will the UIs of the future all converge to chat boxes?"

Oh, let me tell you, we haven't even started.

Multimodality

Let's take a step back to see where we are.

UIs try to solve human-machine interaction.

With LLMs, machines can now understand humans.

Chat's back-and-forth style allows humans to use natural language to describe their intent to a machine.

Copilots can use tools to perform actions. They combine context with user intent to produce solutions.

In the history section, I introduced the idea of increasing bandwidth. The more machines can do, the more ways we need to tell them what to do. However, AI's ability to translate is not restricted to human languages. It is becoming increasingly potent at translating between many different modalities:

Text

Image

Video

Audio

Code

The internet of today can barely be compared to its early days. It started out with only text. Soon, images, audio and videos joined the party, creating today's rich multimedia landscape. This bandwidth evolution is not only measured in bytes but also in meaning.

Learning to cook a steak from a YouTube video is far superior to reading only text. How long it takes, how brown it should get before it is taken out, and what sound it should make when it hits the pan. All this information is vital to the experience of cooking a steak, and we are now able to communicate that information due to increased bandwidth and meaning. AI is going through the same evolution now.

Input

Machines can now understand us and the world, increasingly regardless of how we communicate. Presenting our intent using images or video is often more effective than text.

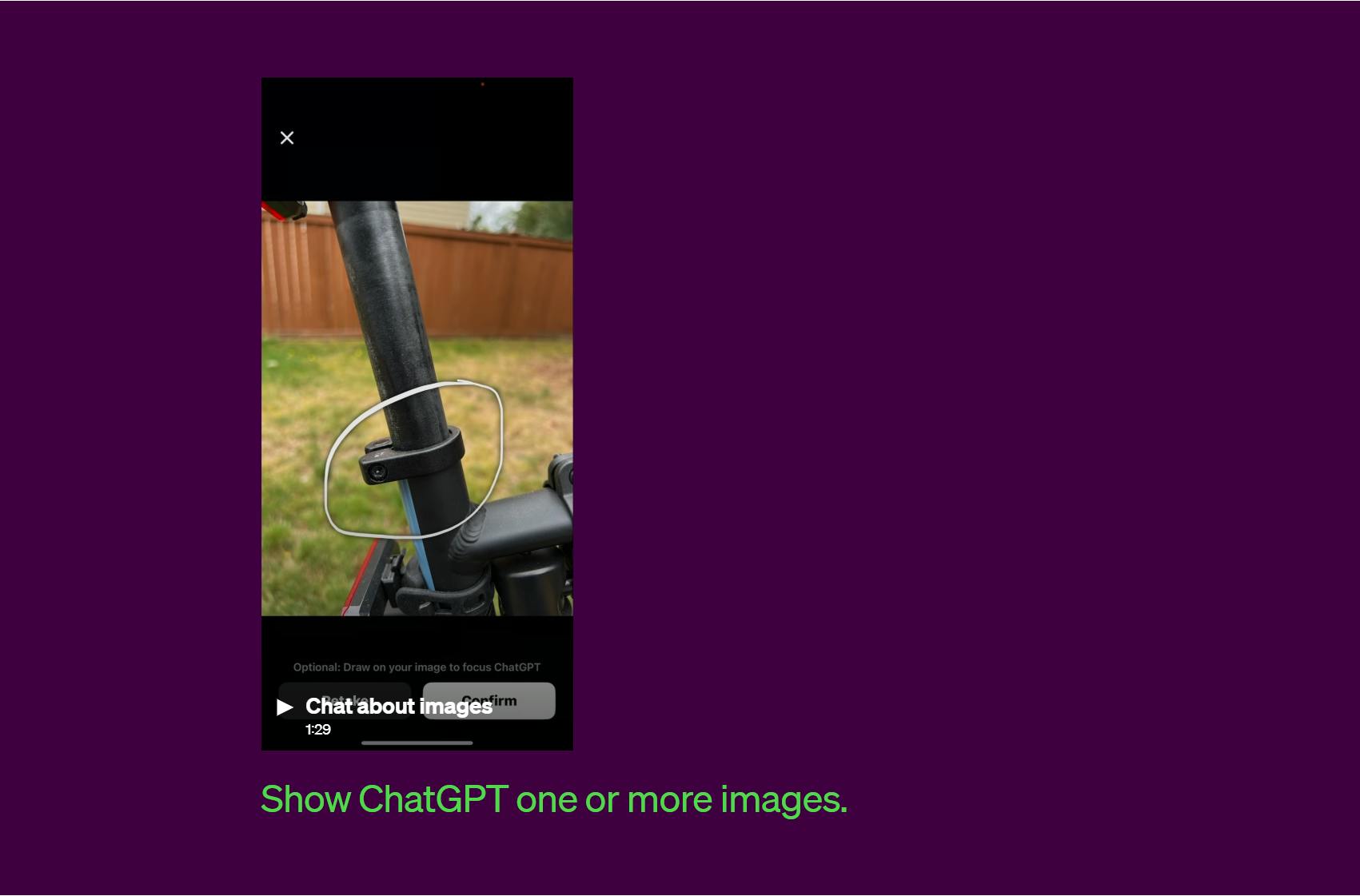

OpenAI is fully embracing multimodality. Snap a photo to point out your issue. Speak rather than write. Imagine how many problems have been solved by simply pointing and saying "This thing".

There is still untapped potential. For example, when conveying emotion to other humans, what we say is only a tiny fraction of the message. The rest is contained in body language, tone of voice and facial expressions. What would an AI therapist be like if it could understand that? How effective could an AI salesperson be if it could pick up those cues? A human needs a different response depending on their state of mind. AI can generate a more suitable response when it captures a broader spectrum of our expression.

Output

ChatGPT can now also reply with images. It can write and run code. It can generate speech.

All of these new features are a MASSIVE deal for accessibility and convenience. In the car and can't use your hands? No problem. It will read the response out to you. Need to know what a carburettor looks like? No problem. It will create an image.

ChatGPT blew my mind because it could interpret my human drivel. But now, AI can translate that drivel to image, audio, video, and even fully functioning apps. The AI's superpower of translation presents an entirely new paradigm of human-machine interaction.

Multimodal UI

The AI-driven multimodal shift opens up a world of possibilities in UI design. But what do these advancements mean for the existing UIs?

Implications:

Apps Becoming Multimodal: As AI becomes proficient in interpreting and responding through various modes, apps will follow suit. Expect a future where applications communicate via text, voice, image, and gestures.

More Natural Interactions: AI's proficiency in human-like dialogues creates a more intuitive and engaging user experience. Soon, interacting with digital devices could feel as natural as conversing with a friend.

Increased Accessibility: Multimodal interactions can make technology more accessible. By supporting different input and output modes, apps can cater to a wide range of user abilities, making digital experiences more inclusive.

Adaptive Interfaces

As we move away from the one-size-fits-all paradigm, we enter the realm of adaptive interfaces.

I like to think of it as dark mode but for everything. Did you notice how apps sometimes know your preferences and adjust their theme to match? It is often labelled as "use device theme" or similar.

Now, imagine you have a preferred font, a preferred layout, and a preferred colour palette. Adaptive interfaces are not limited to looks, either. Content could change based on user preferences: read the docs in the style of your favourite writer, or populate a website with imagery that you enjoy. You would browse the web through your own preferred set of glasses.

This is, however, only the user side. AI, coupled with vast amounts of data about user behaviours, can craft unique UI experiences for each user. If you are a business offering many different services, how great would it be if your website showed precisely what a specific user needs? No more, no less.

Implications:

Personalized Experience: From preferred colour schemes to the organization of information, every aspect of the UI could be adapted to align with the user's preferences and habits.

Contextual Adaptation: By understanding context, such as the device in use, the time of day, the location and even the user's mood, AI might adapt the UI dynamically. Imagine a UI that switches to voice output when it detects the user is driving.

Predictive Capabilities: A significant advantage of AI is that it can anticipate user needs. The UI of tomorrow might proactively adjust itself, providing the user with what they need before they even have to ask.
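As a rough illustration of contextual adaptation, the sketch below maps a few context signals to an output mode and a theme, including the "switch to voice when driving" case and "dark mode but for everything" as a stated preference. The rules are hand-written and the field names are hypothetical; the article imagines AI learning such rules from user behaviour rather than a developer hard-coding them.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OutputMode(Enum):
    VOICE = "voice"      # read responses aloud
    COMPACT = "compact"  # small screens: summaries first
    FULL = "full"        # large screens: full layout


@dataclass
class UserContext:
    device: str                   # e.g. "phone", "desktop", "car"
    is_driving: bool
    hour: int                     # local hour, 0-23
    prefers_dark: Optional[bool]  # None means "no stated preference"


def choose_presentation(ctx: UserContext) -> dict:
    """Hand-written adaptation rules standing in for learned ones."""
    if ctx.is_driving or ctx.device == "car":
        mode = OutputMode.VOICE   # eyes and hands are busy, so speak instead of showing
    elif ctx.device == "phone":
        mode = OutputMode.COMPACT
    else:
        mode = OutputMode.FULL
    # An explicit preference wins; otherwise fall back to an assumed 20:00-07:00 dark window.
    if ctx.prefers_dark is not None:
        dark = ctx.prefers_dark
    else:
        dark = ctx.hour >= 20 or ctx.hour < 7
    return {"mode": mode, "theme": "dark" if dark else "light"}


if __name__ == "__main__":
    print(choose_presentation(UserContext("phone", is_driving=True, hour=12, prefers_dark=None)))
```

An explicit user preference always overrides the inferred default here, mirroring how "use device theme" defers to a setting the user chose.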

Representative Interfaces

Taking adaptive interfaces one step further, AI could serve as an extension of the user, becoming their digital representative and an intermediary between them and the software.

Agent basics

If you've never heard of AI agents, here's the rundown:

Agents act autonomously towards a user-defined goal.

To create a basic agent, one adds long-term memory and tools to an LLM.

The LLM can recursively prompt itself to move towards a goal.
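The three ingredients above fit in a few lines. This is a minimal sketch, not how any particular agent framework works: `llm` is any function from prompt text to a reply, `tools` is a dictionary of named functions, and a plain in-process list stands in for long-term memory (real agents usually persist memory in a database or vector store). The reply format `"<tool> <argument>"` / `"FINISH <answer>"` and the scripted `fake_llm` are assumptions made so the example runs without a model.

```python
from __future__ import annotations

import math
from typing import Callable


def run_agent(goal: str,
              llm: Callable[[str], str],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 10) -> list[str]:
    """Minimal agent loop: the model is re-prompted with its own history until it finishes."""
    memory: list[str] = [f"GOAL: {goal}"]  # a plain list stands in for long-term memory
    for _ in range(max_steps):             # cap steps so a confused model cannot loop forever
        reply = llm("\n".join(memory))     # the prompt is the goal plus everything so far
        memory.append(f"ACTION: {reply}")
        name, _, arg = reply.partition(" ")
        if name == "FINISH":
            break
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        memory.append(f"OBSERVATION: {observation}")
    return memory


# --- Usage with stand-ins (hypothetical; no real model involved) ---

def fake_llm(prompt: str) -> str:
    """Scripted 'model': call the calculator once, then report its result."""
    if "OBSERVATION: " not in prompt:
        return "calculate 17*23"
    last = prompt.rsplit("OBSERVATION: ", 1)[1].splitlines()[0]
    return f"FINISH {last}"


TOOLS = {"calculate": lambda expr: str(math.prod(int(x) for x in expr.split("*")))}

if __name__ == "__main__":
    for line in run_agent("What is 17 * 23?", fake_llm, TOOLS):
        print(line)
```

Each pass feeds the model its own previous actions and the tools' observations, which is the "recursive self-prompting" that moves it towards the goal.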

Getting even closer to a human-feeling assistant, agents give AI some autonomy to complete tasks on your behalf. But why stop at one?

Multi-agent systems

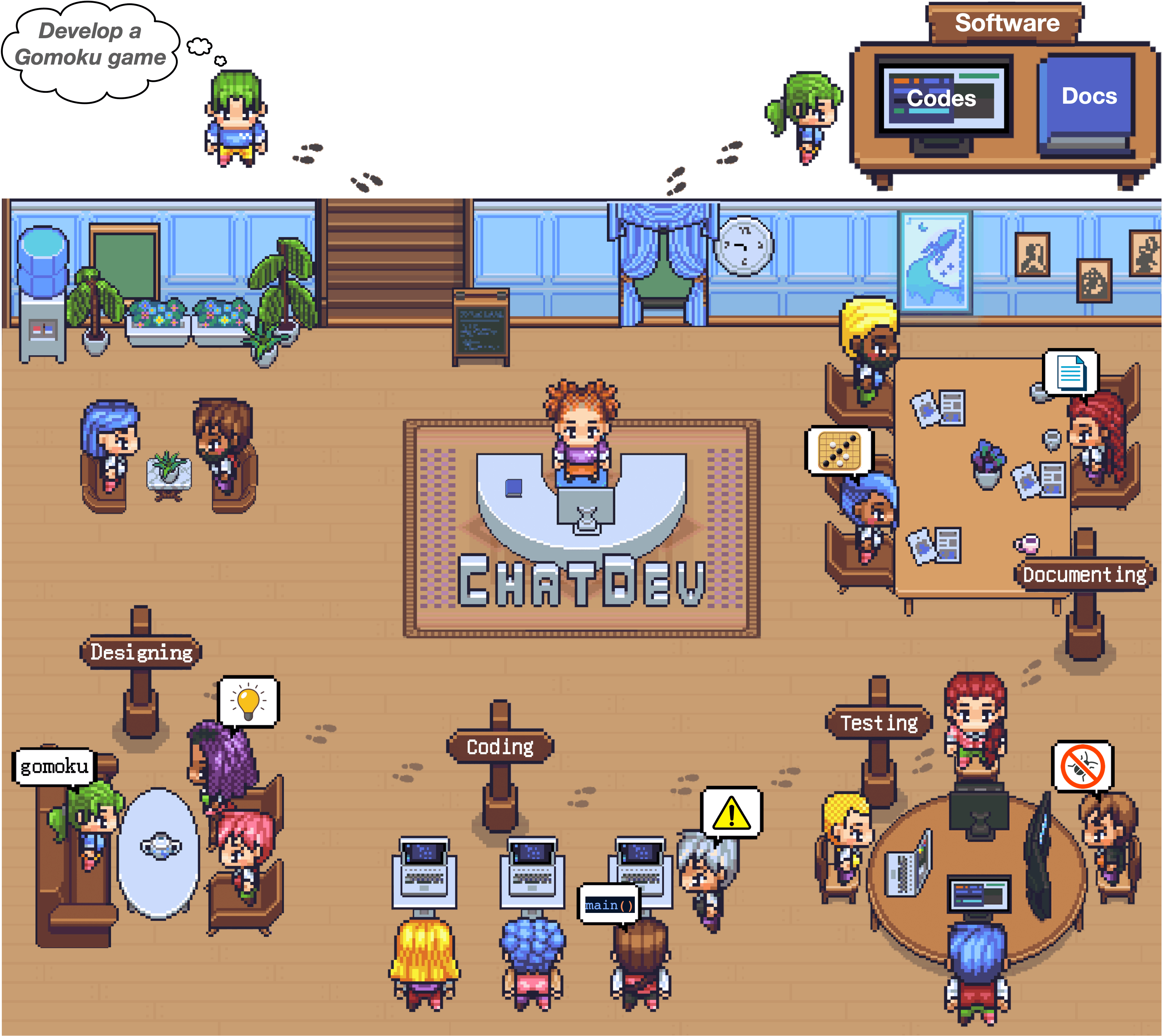

Projects like ChatDev allow you to compose a team of AI agents to form an organization that works to complete your objective.

If that sounds absolutely bonkers, that's because it is. You can recruit an army of AI specialists that work for you. Assigning different roles to each agent can even improve the output, because the agents check each other's work from different perspectives. Even Microsoft is betting on this technology with AutoGen, which was just released. Keep an eye out for it.
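The "agents check each other's work" idea can be sketched as two roles in a loop: a writer drafts, a reviewer critiques, and the writer revises until the reviewer approves or a round limit is hit. This is not ChatDev's or AutoGen's actual API; the `collaborate` function, the `"APPROVE"` convention and the scripted stand-in agents are hypothetical. In practice each role would be the same LLM called with a different system prompt.

```python
from __future__ import annotations

from typing import Callable

Agent = Callable[[str], str]  # any function from prompt text to reply text


def collaborate(task: str, writer: Agent, reviewer: Agent,
                max_rounds: int = 3) -> tuple[str, list[str]]:
    """Writer drafts, reviewer critiques; repeat until the reviewer replies 'APPROVE'.

    Returns the latest draft (which may be unapproved if max_rounds runs out)
    and a transcript of the exchange.
    """
    transcript: list[str] = []
    draft = writer(f"TASK: {task}")
    for _ in range(max_rounds):
        transcript.append(f"writer: {draft}")
        feedback = reviewer(f"TASK: {task}\nDRAFT: {draft}")
        transcript.append(f"reviewer: {feedback}")
        if feedback.strip() == "APPROVE":
            break
        draft = writer(f"TASK: {task}\nDRAFT: {draft}\nFEEDBACK: {feedback}")
    return draft, transcript


# --- Scripted stand-ins for two role-prompted models (hypothetical) ---

def writer(prompt: str) -> str:
    return "v2: add(a, b) with docstring" if "FEEDBACK" in prompt else "v1: add(a, b)"


def reviewer(prompt: str) -> str:
    draft = prompt.split("DRAFT: ", 1)[1]
    return "APPROVE" if "docstring" in draft else "Please add a docstring."


if __name__ == "__main__":
    final, log = collaborate("Write an add function", writer, reviewer)
    print("\n".join(log))
```

The round limit matters: without it, two agents that never agree would keep talking (and spending tokens) forever.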

Copilots have given us a glimpse of this future by translating user intent into action. Agents take this to a whole new level. With agent systems like ChatDev, AutoGen and HyperWrite that can autonomously complete tasks using your browser, will we no longer need to interact with apps ourselves? Where does this leave developers?

Apps in a World of Agents

Landing pages are made for humans. Sure, agents are starting to understand how to use our web pages, but why build web pages if agents can just as quickly parse an API? Why spend time and money creating a web page when the user's adaptive interface already displays your info?

If you are developing a software service for agents, the only thing that matters is how well the agent understands you. If we strip away landing pages and fancy layouts, what is left is API design and docs.
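What "API design and docs for agents" might look like: a machine-readable description of an endpoint, plus a check that an agent's proposed call matches it. The `book_table` service and its fields are hypothetical, and the description loosely follows the JSON Schema style many LLM tool-calling setups use; the tiny checker only understands two types, so a real service would use a full JSON Schema validator instead.

```python
from __future__ import annotations

import json

# Hypothetical service description, written for an agent rather than a human.
BOOK_TABLE = {
    "name": "book_table",
    "description": "Reserve a table. Fails if the restaurant is full at that time.",
    "parameters": {
        "type": "object",
        "properties": {
            "restaurant_id": {"type": "string", "description": "ID returned by a restaurant search"},
            "party_size": {"type": "integer", "description": "Number of guests"},
            "time": {"type": "string", "description": "ISO 8601 local time, e.g. 2024-05-01T19:30"},
        },
        "required": ["restaurant_id", "party_size", "time"],
    },
}

_TYPES = {"string": str, "integer": int}  # only the types this sketch needs


def check_call(spec: dict, call_json: str) -> list[str]:
    """Return a list of problems with an agent's proposed call (an empty list means OK)."""
    args = json.loads(call_json)
    props = spec["parameters"]["properties"]
    problems = [f"missing {k}" for k in spec["parameters"]["required"] if k not in args]
    for key, value in args.items():
        if key not in props:
            problems.append(f"unknown {key}")
        elif not isinstance(value, _TYPES[props[key]["type"]]):
            problems.append(f"{key} should be {props[key]['type']}")
    return problems


if __name__ == "__main__":
    print(check_call(BOOK_TABLE, '{"restaurant_id": "r1", "party_size": "two"}'))
```

The descriptions carry as much weight as the types: they are the only "landing page" the agent will ever read.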

In a way, this is quite freeing. Focus on creating new functionality and let AI determine how it could benefit the user.

On the other hand, website creation tools are becoming increasingly advanced and easy to use. Vercel's v0 creates beautiful React components from a text prompt. I doubt it will be long before a single text prompt and a link to the API can create an entire frontend. If full site generation is cheap and easy, there's no reason not to have content optimized for humans and agents alike.

I wonder if there will be SEO for agents 🤔 food for thought...

UI in a World of Agents

Since the beginning of this article, we have taken gigantic leaps towards our JARVIS dream. With our current terminology, Tony Stark's assistant would be categorized as an agent. Using agents is such a powerful idea that I see agents as the primary way we interact with the digital world in the future. But what will that interaction look like? What's the surface area between the human and the agent?

If you've read this article from the beginning, alarm bells should be ringing by now. One of the next significant changes in the UI space will be designing for human-agent interaction. If agents become the primary way of using a computer, they will be more akin to an operating system than an app.

In contrast to a copilot, an agent may choose more broadly which tool to use to complete the user's objective. It could even recruit other agents to complete a task. I suspect how much of this interaction is visible to the user will depend on our trust in these agent systems. There's no need to micro-manage agent systems if we know they're reliable. Speaking of reliability, who is building this?

Although we could see a completely new player in this race, I predict that incumbent computing platforms will be the first to integrate these features into devices we already use.

Meta has made a big bet on virtual and augmented reality and is now developing representative interfaces for users' digital avatars. In Meta's recent announcement, they say that you can train an AI to act and speak like you so that people can still interact with your digital clone, even if you are not online. That seems sorely needed based on the small number of people populating their VR worlds. But jokes aside, these are the first steps towards representative interfaces, and I'm excited to see what comes next.

They also showcased how AI integrates into Instagram and Facebook Messenger, as well as their new AI glasses made in partnership with Ray-Ban, which give us a glimpse of how multimodal AI interfaces can be applied to wearable tech. Apple is calling Meta's bet on VR and AR with its creepy-eyed Vision Pro headset, which is due early next year. It is interesting to see how Meta and Apple create different interfaces for their respective headsets. UI for sunglasses that you take out and about differs drastically from that of a Meta Quest gaming headset or Apple's media and workspace headset. Apple focuses on hand gestures, while the gaming-focused Quest uses controllers. I am interested to see what multimodal AI will bring to the table.

Windows 11 also just launched Windows Copilot, integrated directly into the OS. It's still early, but we will soon see agentic interactions directly from the desktop.

Agents Summary

The potential of AI agents has just started to be realized in UI design. Here's a recap of the key ideas covered:

The Emergence of AI Agents: AI agents act autonomously towards user-defined goals, effectively becoming digital extensions of the users. They utilize AI's rich translation abilities to interact with the digital world on the user's behalf.

AI as Middlemen: Users may no longer need to interact with complex GUIs. Instead, their AI agent will interact with the software on their behalf based on their preferences and instructions.

Reduced Learning Curve: With AI representatives, users may not need to learn how to use complex software, saving time and making experiences more enjoyable.

A Universal Interface: Ultimately, AI representatives could become a universal interface. No matter what software or application a user interacts with, their AI can serve as their primary user interface, greatly simplifying digital interactions.

Towards Multi-agent Systems: Projects like ChatDev, AutoGen, and HyperWrite are pushing the boundaries by enabling composite teams of AI agents. Users can now create personalized cohorts of AI assistants with different specialities.

Changing a Developer's Role: As AI agents become more common, we'll likely see an emphasis on API design and documentation. The easier an agent can understand a service, the more accessible and effective that service becomes to users.

Shaping UIs in an Agent-centric World: As AI agents integrate into our digital interactions, there will be a new challenge in designing user interfaces tailored for human-agent interaction.

The Future of Consumer Tech: Incumbent tech giants like Meta already invest heavily in representative interfaces, indicating a movement toward more personalized, autonomous technology experiences.

Simplified Interactions and Enhanced Accessibility: AI agents could ultimately simplify digital interactions, providing a universal interface that adapts to the user's unique preferences and needs. The representative interfaces of the future hold the promise of a more accessible, intuitive digital world.

We're just starting on this exciting journey towards fully autonomous, AI-powered digital experiences. Hold on tight as things are about to get even more fascinating.

Conclusion

The evolution of AI technologies presents an exciting transformation for user interfaces. What started as simple levers and knobs has now branched into chat boxes, copilots, adaptive and representative interfaces. The multimodal abilities of AI are set to redefine how we interact with our digital world.

One thing is for sure: as AI continues honing its human-like communication skills, the landscape of user engagement will undergo a dramatic shift. Our machines have begun to understand us better, and sooner than we think, they'll communicate like us, too, setting the stage for a future of intelligent actors directed by natural human expression.

The End

Thanks for reading :) This is my first time writing an idea-based article. I hope it was exciting and thought-provoking.

What novel interfaces have you seen spring up recently? How do you think AI will change our UIs? Let me know in the comments!